THE INITIAL WORKFLOW

A workflow was designed and shipped to customers without prototype testing, despite initial concerns about its usability. Budget and time constraints prevented validation before implementation, leading to a design that would later prove problematic for end users.

Initial Concerns:

From the start, there were misgivings about the workflow's complexity and potential for user error. However, budget and time constraints did not allow for prototype testing before coding.

The Shipped Solution:

The workflow was implemented and deployed to customers without user validation, setting the stage for usability issues that would later be quantified through research.

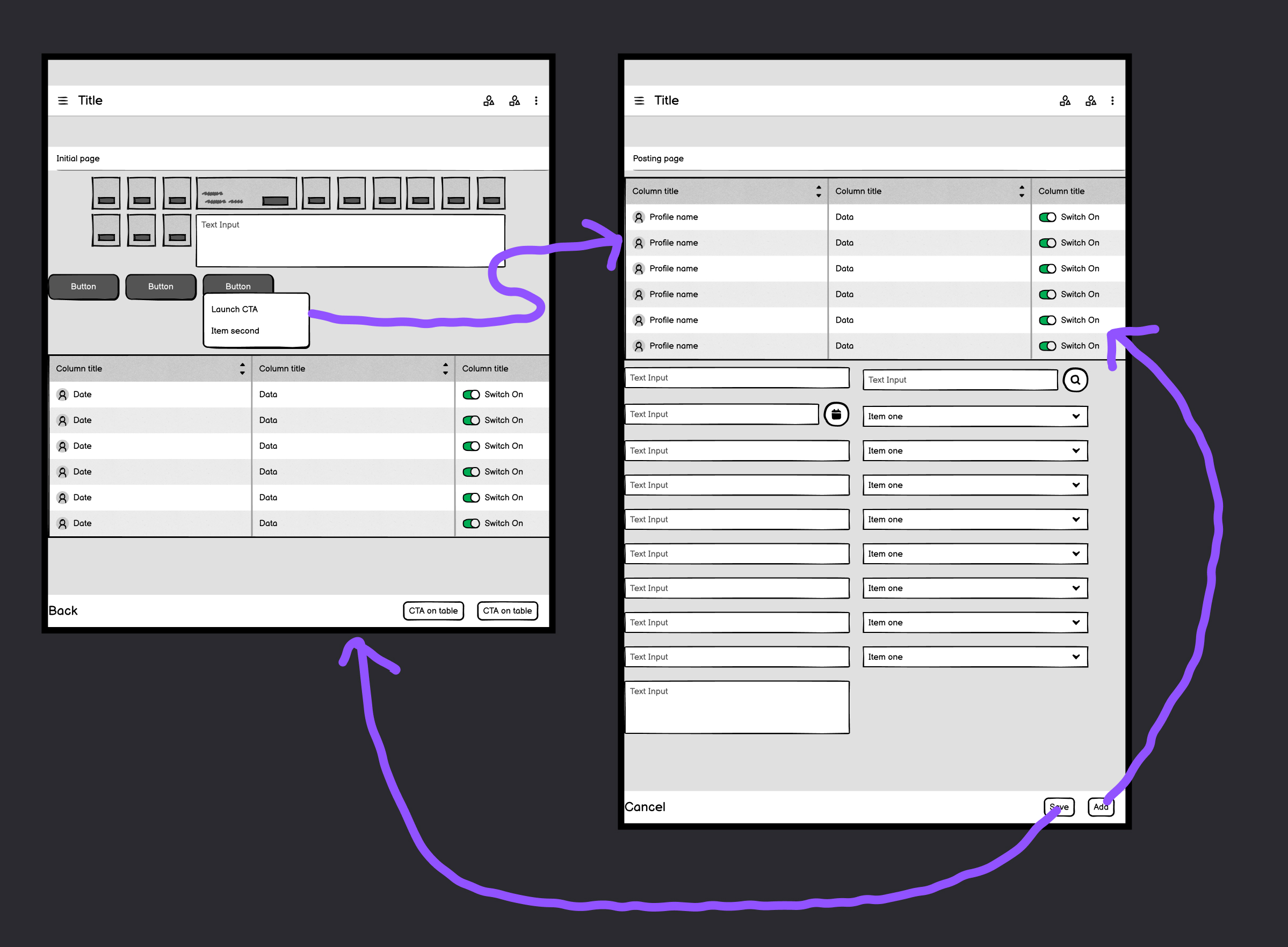

Original Workflow Diagram

The original workflow design that was shipped to customers:

- The second page is launched by a CTA hidden in a menu behind a button.

- The second posting page is a full-page form, with the data to be posted staged above the form in an ever-growing table.

- As the table grows, the form is pushed down below the fold.

The problems with this design would later be confirmed through usability testing, validating the initial concerns about user difficulty and error rates.

ON-SITE OBSERVATION

An on-site visit revealed the user experience issues firsthand. Observing users interact with the workflow in their actual work environment provided concrete evidence of the problems and validated the initial concerns that had been raised.

On-Site Observation:

Visited the customer site to observe real-world user interactions with the workflow, identifying pain points and user difficulties as they occurred.

Key Finding:

The on-site observation confirmed that the workflow was causing significant user difficulties, validating the need for a redesign.

Decision:

Redesign the workflow with a focus on reducing user error and improving overall usability.

TWO APPROACHES TO THE SOLUTION

After returning from the on-site visit, two different approaches were designed to address the workflow issues. Initial ideas included moving the CTA up, but ultimately a side drawer solution was developed. However, the product manager (PM) preferred minimal changes to the existing UI, so both approaches were prepared for testing.

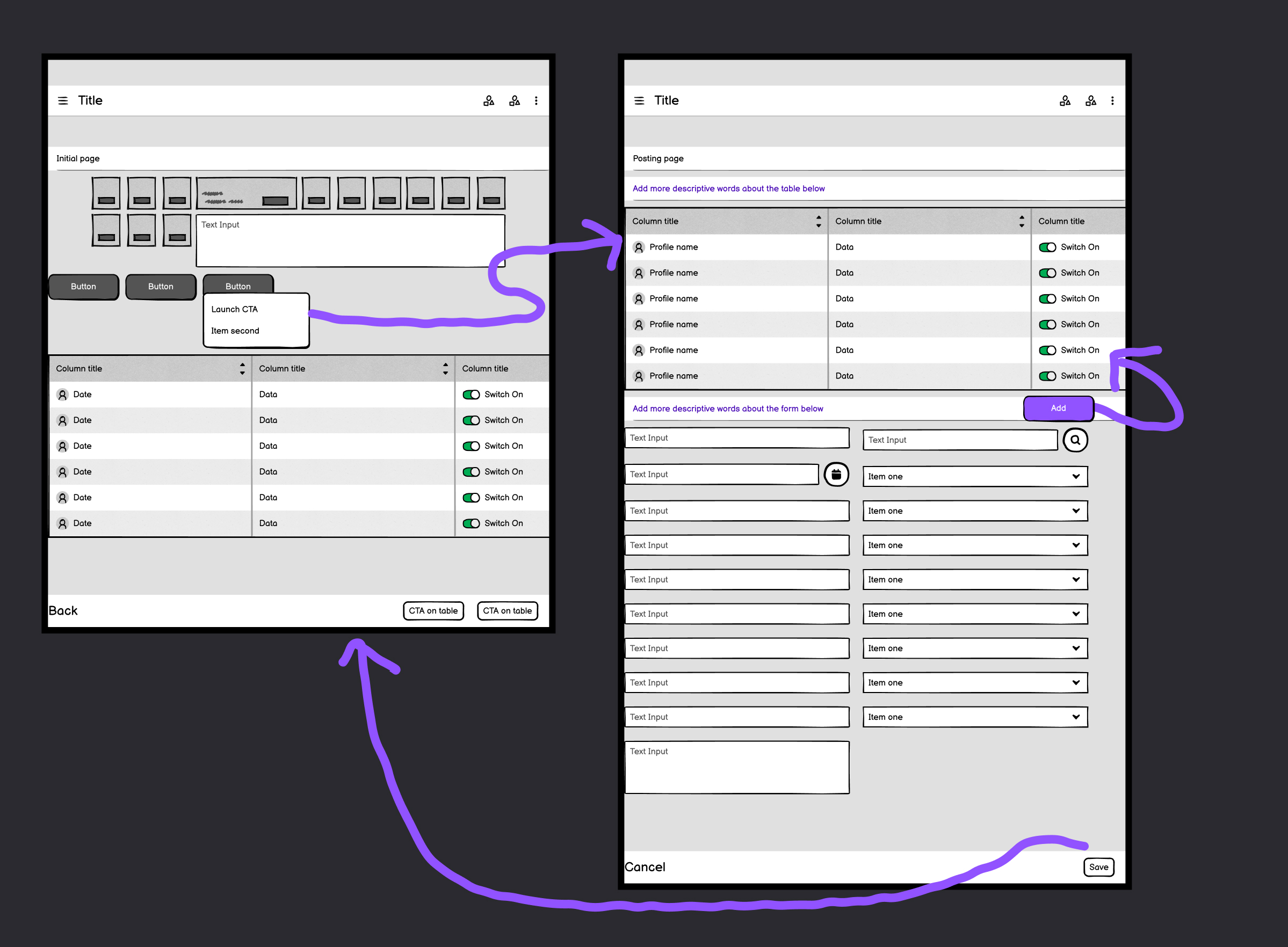

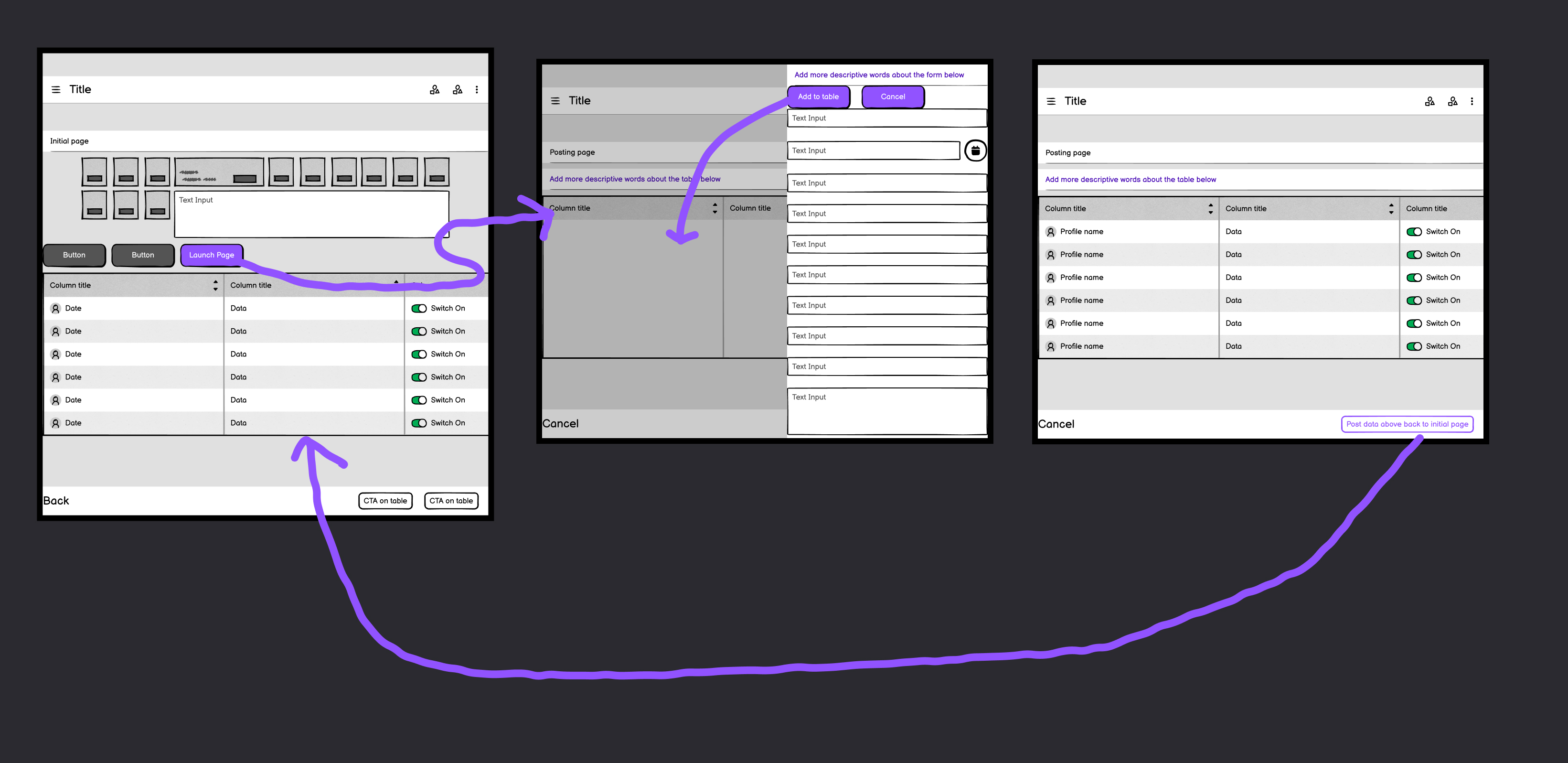

PM's Minimal Change Approach

The PM's minimal-change approach kept the existing workflow structure with only minor adjustments:

- Moving the "Add to table" button up, closer to the table

- Adding considerably more explanatory text to an already busy page

Side Drawer Solution

- Introduced a "cattle chute" side drawer: the user had to fill out the form and was held in the drawer unless they cancelled

- Once the form data was added to the table, the drawer closed

- The only item on the page was the table of pending postings

- The only other element on the page was the CTA to post the table data

MAZE TEST VALIDATION

After returning home, the team tested both workflow approaches using Maze, a platform for unmoderated usability testing. This provided quantitative data to objectively compare the two solutions and determine which performed better with users.

Test Methodology:

A Maze test comparing both workflow approaches with real users, measuring task success, drop-off, misclick rate, and perceived ease of use.

Results:

The side drawer solution tested considerably better, providing clear data-driven evidence for which approach to implement.

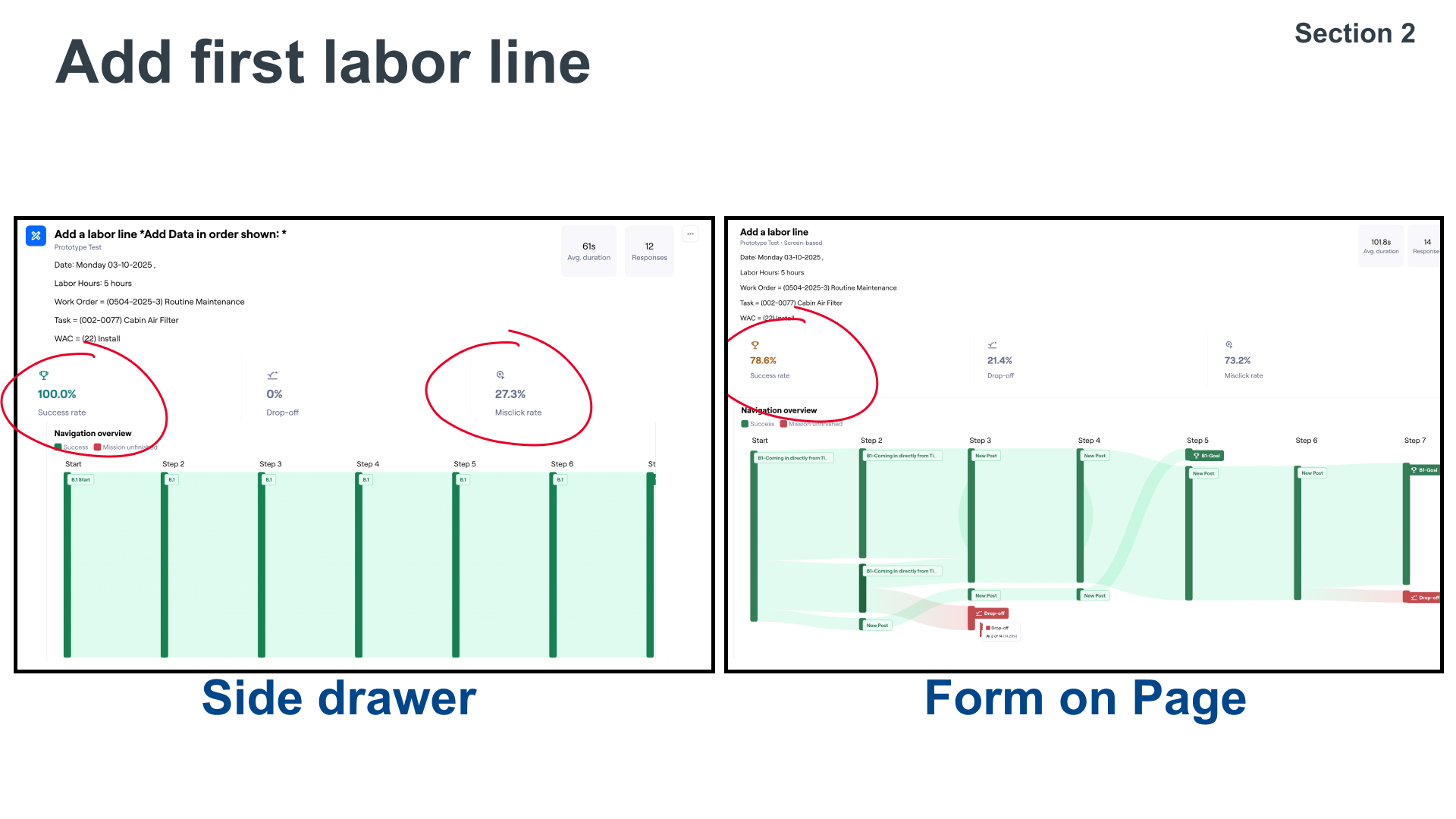

Maze Test: Workflow Comparison

The Maze test workflow analysis showed dramatic improvements with the side drawer solution. The side drawer approach achieved a 100% success rate, 0% drop-off, and a 27.3% misclick rate, compared with the form-on-page approach, which had a 78.6% success rate, 21.4% drop-off, and a 73.2% misclick rate.

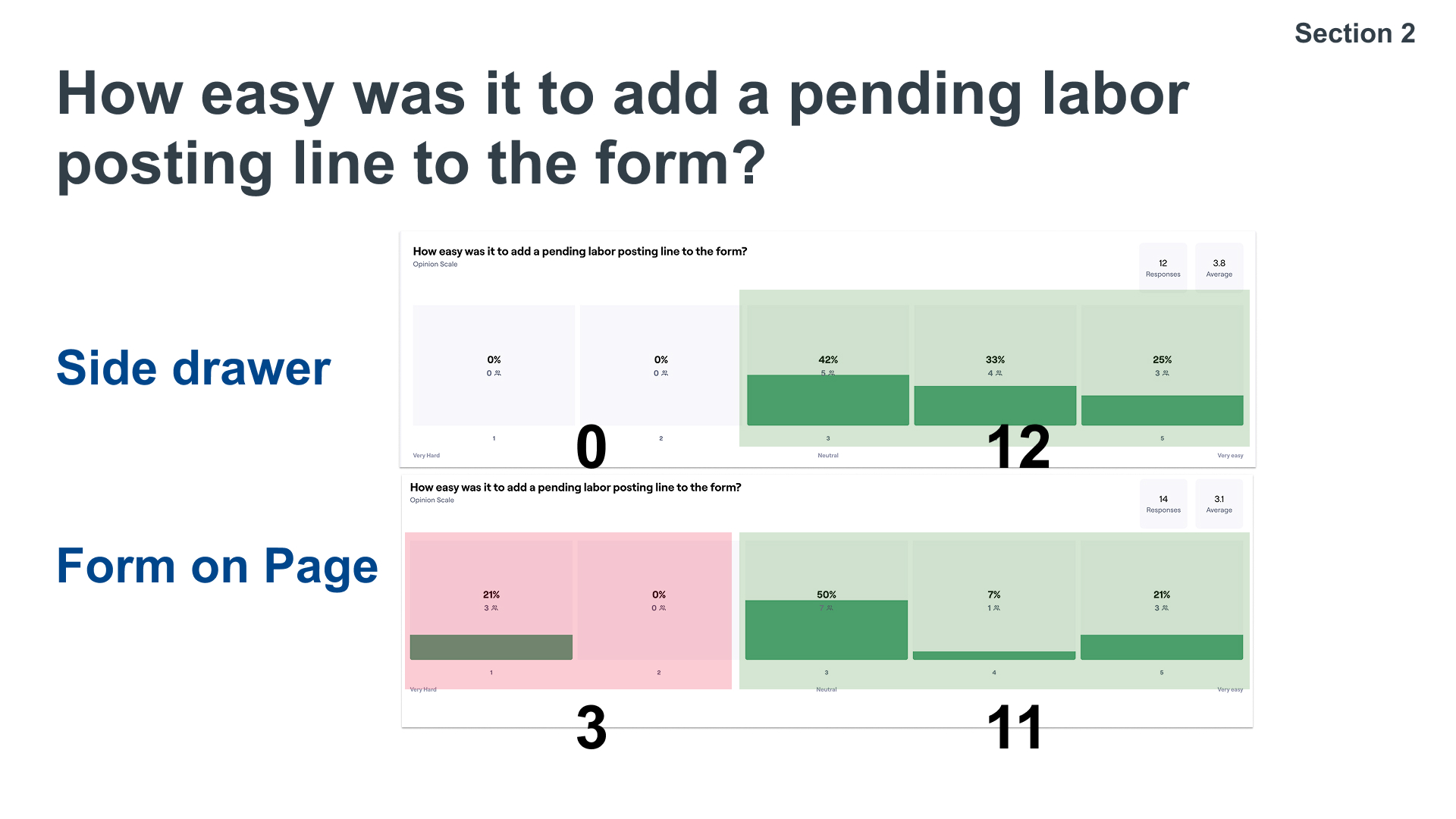

Maze Test: User Ease Ratings

User ease ratings further supported the side drawer approach. The side drawer received an average ease rating of 3.8, with no responses in the "Very Hard" category, while the form-on-page approach averaged 2.8, with 21% of users rating it "Very Hard".

LESSONS LEARNED

This case study demonstrates the critical importance of data-driven design decisions and the value of user testing before and after implementation. The process validated initial concerns, provided objective evidence for design decisions, and ultimately led to a better user experience.

Trust Your Instincts, Validate with Data:

Initial concerns about usability were valid, but data from usability studies provided the evidence needed to drive change.

Testing Before Shipping:

Prototype testing before implementation could have prevented user difficulties and saved development resources.

Data-Driven Decisions:

When multiple solutions are proposed, objective testing with tools such as Maze provides clear evidence for which approach to implement.

The Value of Iteration:

Even after shipping, usability studies and iterative testing can identify issues and validate improved solutions.